
Name: Shaojun Hu (胡少军)

Title: Associate Professor

Office: Room 315, College of Information Engineering

Email: hsj AT nwsuaf DOT edu DOT cn

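Basic Information

Shaojun Hu (male) is an Associate Professor and doctoral supervisor in the College of Information Engineering, Northwest A&F University, and a recipient of the Shaanxi Province Outstanding Returned Overseas Talent title. He received his Ph.D. in engineering (Electronic Information) from Iwate University in 2009 and was a postdoctoral researcher at the Institute of Computer Graphics and Algorithms, Vienna University of Technology, in 2014. His research focuses on computer graphics, human-computer interaction, and smart agriculture.

He serves as a council member of the Shaanxi Society of Image and Graphics; a youth editorial board member of Chinese and English journals including Transactions of the Chinese Society for Agricultural Machinery (《农业机械学报》), Journal of Intelligent Agricultural Mechanization (《智能化农业装备学报》), and Smart Forestry; a correspondence reviewer for the Youth and General Programs of the National Natural Science Foundation of China; a correspondence judge for the China Postgraduate Innovation and Practice Competition series; an invited reviewer for Transactions of the Chinese Society of Agricultural Engineering (《农业工程学报》); a committee member of Nicograph 2018/2019/2023/2024/2025, ISID2021/2022/2023, ICVR2023/ICVR2024/ICVR2025, and WSCG2024/2025; publicity chair of IFIP-ICEC2020; and session committee chair of the 4th and 5th China Forestry and Grassland Computer Applications Conference and the 6th China Smart Forestry Conference. He is an ACM member and a reviewer for leading conferences and journals including ACM SIGGRAPH, IEEE/RSJ IROS, IEEE TVCG, Computer Graphics Forum, ISPRS P&RS, IEEE JSTARS, and Computers & Graphics.

He received a Japanese Government (Ministry of Education, Culture, Sports, Science and Technology) scholarship in 2007 and a Eurasia-Pacific Uninet postdoctoral scholarship in 2014, and in 2016 he was a visiting scholar at the University of Tokyo, funded by the China Scholarship Council. He has led 6 projects at the provincial/ministerial level or above, including grants from the National Natural Science Foundation of China and the Natural Science Foundation of Shaanxi Province. He has published more than 20 indexed papers in international journals such as IEEE RA-L, The Visual Computer, Computers & Graphics, and IEEE GRSL, and at CCF-recommended international conferences such as IEEE ICRA, CGI, and SMI. He holds more than 10 granted patents and has served as associate editor-in-chief of one national planned textbook and one Science Press planned textbook.

A course case he led for the Electronic Information professional degree was selected in 2021 among the first batch of Shaanxi Province professional-degree graduate teaching cases. A textbook he co-authored won the Second Prize of the 2020 Shaanxi Province Excellent Textbook Award, and he shared the Second Prize of the 2020 Shaanxi Higher Education Science and Technology Award. Graduate students under his supervision have won 7 national awards in the China Postgraduate Innovation and Practice Competition series, and he has been named Outstanding Advisor at the national finals 3 times.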

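Education

Sept. 1999 - July 2003: Northwest A&F University, Department of Computer Science and Technology, B.Eng.; advisor: Prof. Dongjian He;

Sept. 2003 - July 2006: Northwest A&F University, College of Mechanical and Electronic Engineering, M.Eng.; advisors: Prof. Dongjian He and Prof. Nan Geng;

Oct. 2006 - Sept. 2009: Iwate University, Japan, Electronic Information, Ph.D. in engineering; advisors: Prof. Norishige Chiba and Prof. Tadahiro Fujimoto.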

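Work Experience

Oct. 2009 - June 2010: Computer Graphics Laboratory, Iwate University, Japan; academic researcher; advisor: Prof. Norishige Chiba;

Nov. 2010 - Dec. 2013: Northwest A&F University; Lecturer;

Oct. 2014 - Feb. 2015: Institute of Computer Graphics and Algorithms, Vienna University of Technology; postdoctoral researcher; advisor: Prof. Michael Wimmer;

Mar. 2016 - Mar. 2017: User Interface Research Group, Department of Computer Science, The University of Tokyo; visiting scholar; advisor: Prof. Takeo Igarashi;

July 2013 - present: Northwest A&F University; supervisor of academic and professional-degree master's students;

Jan. 2014 - present: Northwest A&F University; Associate Professor;

July 2025 - present: Northwest A&F University; supervisor of academic and professional-degree doctoral students.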

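Academic Service

Member of ACM, IEEE, and the Chinese Society of Agricultural Engineering; council member of the Shaanxi Society of Image and Graphics; correspondence reviewer for the National Natural Science Foundation of China; correspondence judge for the China Postgraduate Innovation and Practice Competition series; youth editorial board member of journals including Transactions of the Chinese Society for Agricultural Machinery (《农业机械学报》), Journal of Intelligent Agricultural Mechanization (《智能化农业装备学报》), and Smart Forestry; reviewer for leading conferences and journals including ACM SIGGRAPH, IEEE TVCG, Computer Graphics Forum, Computers & Graphics, The Visual Computer, ISPRS Journal of Photogrammetry and Remote Sensing, IEEE GRSL, IEEE JSTARS, Computers and Electronics in Agriculture, Computer Animation & Virtual Worlds, Measurement, IEEE/RSJ IROS, Journal of Image and Graphics (《中国图像图形学报》), and Transactions of the Chinese Society of Agricultural Engineering (《农业工程学报》).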

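Awards

2025: Received the title "Shaanxi Province Outstanding Returned Overseas Talent";

2025: Silver Award at the 4th Shaanxi Province Postdoctoral Innovation and Entrepreneurship Competition and Returned Overseas Scholars Innovation and Entrepreneurship Competition;

2024: Supervised graduate students Tingting Li (李婷婷) et al., who won a national Third Prize at the 10th China Postgraduate Smart City Technology and Creative Design Competition;

2024: Supervised graduate student Shicheng Qiu (邱事成), whose thesis was named a university-level Outstanding Master's Thesis;

2024: Supervised undergraduate Bojian Wang (王铂坚), whose thesis was named a university-level Outstanding Undergraduate Thesis;

2023: Supervised undergraduate Yuxuan Qiu (邱雨萱), whose thesis was named a university-level Outstanding Undergraduate Thesis;

2023: Supervised graduate students Mingxin Jiao (焦明鑫) et al., who won a national First Prize at the 5th China Postgraduate Artificial Intelligence Innovation Competition;

2023: Outstanding Advisor, 5th China Postgraduate Artificial Intelligence Innovation Competition;

2023: Supervised graduate students Minghui Lian (连明慧) et al., who won a national Third Prize at the 5th China Postgraduate Robot Innovation Design Competition;

2022: Supervised graduate students Hao Jin (金昊) et al., who won a national Third Prize at the 4th China Postgraduate Robot Innovation Design Competition;

2022: Outstanding Advisor, 3rd China Postgraduate Rural Revitalization "Science and Technology for Agriculture+" Innovation Competition;

2022: Supervised graduate students Yuxin Zhang (张誉心) et al., who won a national Third Prize at the 3rd China Postgraduate Rural Revitalization "Science and Technology for Agriculture+" Innovation Competition;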

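2021: Led an Electronic Information professional-degree case selected for the 2021 Shaanxi Province Professional Degree Graduate Teaching Case Library;

2021: Supervised graduate students Yanxing Lv (吕艳星) et al., who won a university-level Second Prize at the Graduate Innovation Forum;

2021: Supervised graduate students Weihuan Feng (冯伟桓) et al., who won a university-level First Prize at the Graduate Artificial Intelligence Innovation Competition;

2021: Outstanding Advisor, 3rd China Postgraduate Robot Innovation Design Competition;

2021: Supervised graduate students Hao Jin (金昊) et al., who won a national Third Prize at the 3rd China Postgraduate Robot Innovation Design Competition;

2021: Supervised graduate student Yida Zhang (张一大), who received the university-level "Top Ten Graduates" honor;

2020: Second Prize, Shaanxi Higher Education Science and Technology Award (ranked 4th of 11);

2020: A supervised master's thesis was selected in the first "Shaanxi Computer Society Outstanding Doctoral/Master's Thesis" awards;

2020: Supervised graduate students Xinyue Zhang (张馨月) et al., who won a national Third Prize at the 2nd China Postgraduate Robot Innovation Design Competition;

2020: Received the university's 6th "Good Mentor in My Heart" title;

2019: Supervised graduate student Xinyue Zhang (张馨月), who received the National Scholarship;

2014: Eurasia-Pacific Uninet (EURASIA-PACIFIC UNINET) postdoctoral scholarship;

2007: Japanese Ministry of Education, Culture, Sports, Science and Technology (MONBUKAGAKUSHO) doctoral scholarship.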

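Research Interests

Computer graphics, human-computer interaction, smart agriculture

Courses

Undergraduate courses:

[1] C Programming (university-level first-class undergraduate course)

[2] Data Structures

[3] Object-Oriented Programming (course leader; university-level first-class undergraduate course)

[4] Computer Graphics

[5] Virtual Reality Technology

[6] Digital Image Processing

[7] Digital Twin Watershed

[8] Object-Oriented Programming Practice

[9] Comprehensive Practical Training in Data Structures and C

Graduate courses:

[1] Graphics and Virtual Reality

[2] Advanced 3D Computer Modeling (course leader; provincial graduate teaching case course)

[3] Case Studies in Agricultural Engineering and Information Technology

[4] Algorithm Design and Analysis (taught entirely in English)

Textbooks (as associate editor-in-chief):

[1] Digital Image Processing and Analysis (数字图像处理与分析; Science Press 14th Five-Year Plan textbook). Science Press. Nov. 2023. ISBN: 978-7-0307-6954-1.

[2] Digital Image Processing, 4th ed. (数字图像处理; national 12th Five-Year Plan textbook). Xidian University Press. Aug. 2022. ISBN: 978-7-5606-6582-5.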

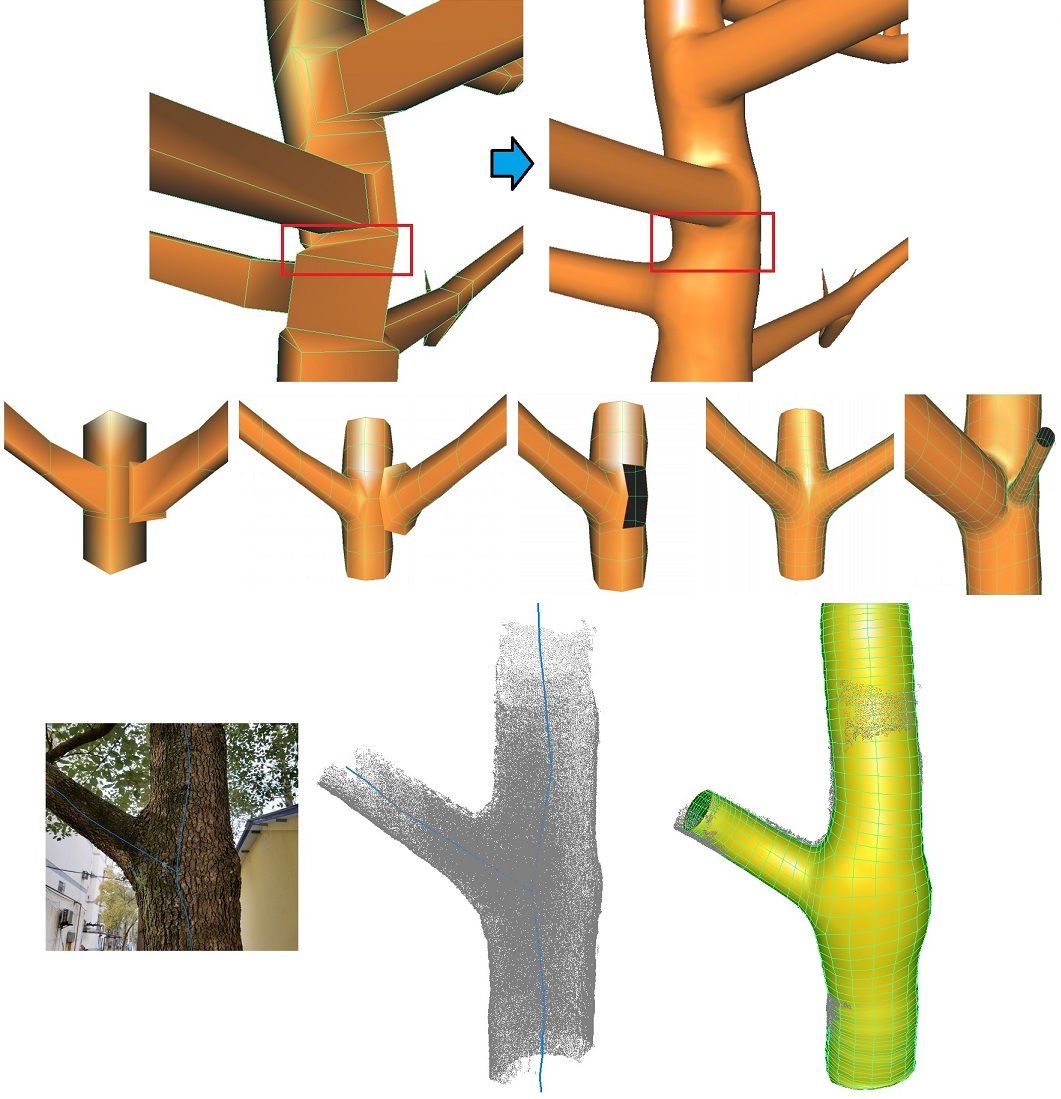

Research Showcase

[1] Image-based tree modeling (C&G2024)

[2] Data glove-based virtual fruit-picking system (ICVR2024) [Link][Link2]

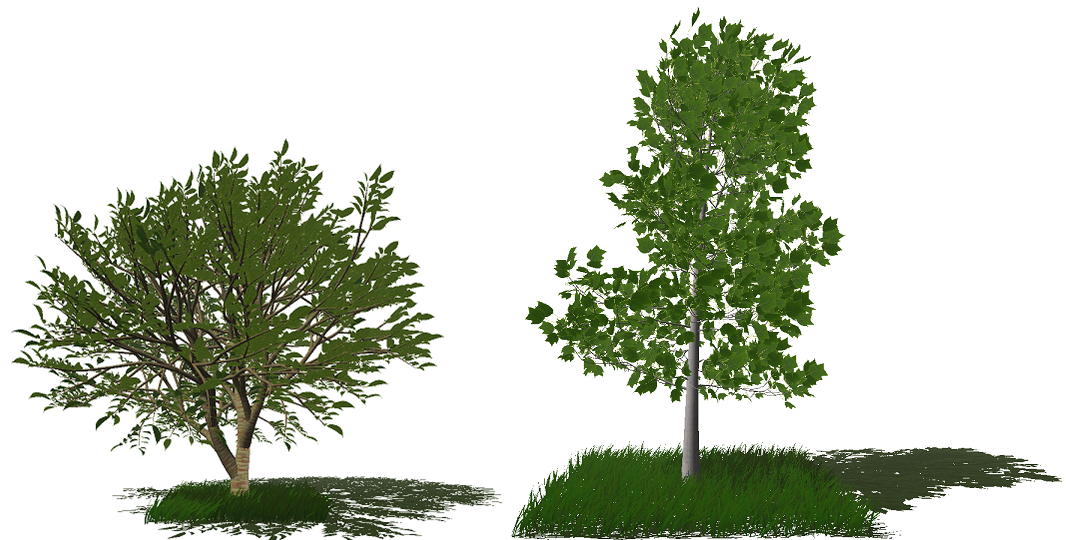

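[3] Physics-based tree animation (CGI2012)

[4] Video-driven tree animation (CGI2017)

Video links: [Tree motion video tracking][Animation of a tree swaying in the wind]

[5] Physics-based simulation of leaf deformation (CASA2009)

Video link: [Deformation simulation of Liriodendron (Chinese tulip tree) leaves in a wind field]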

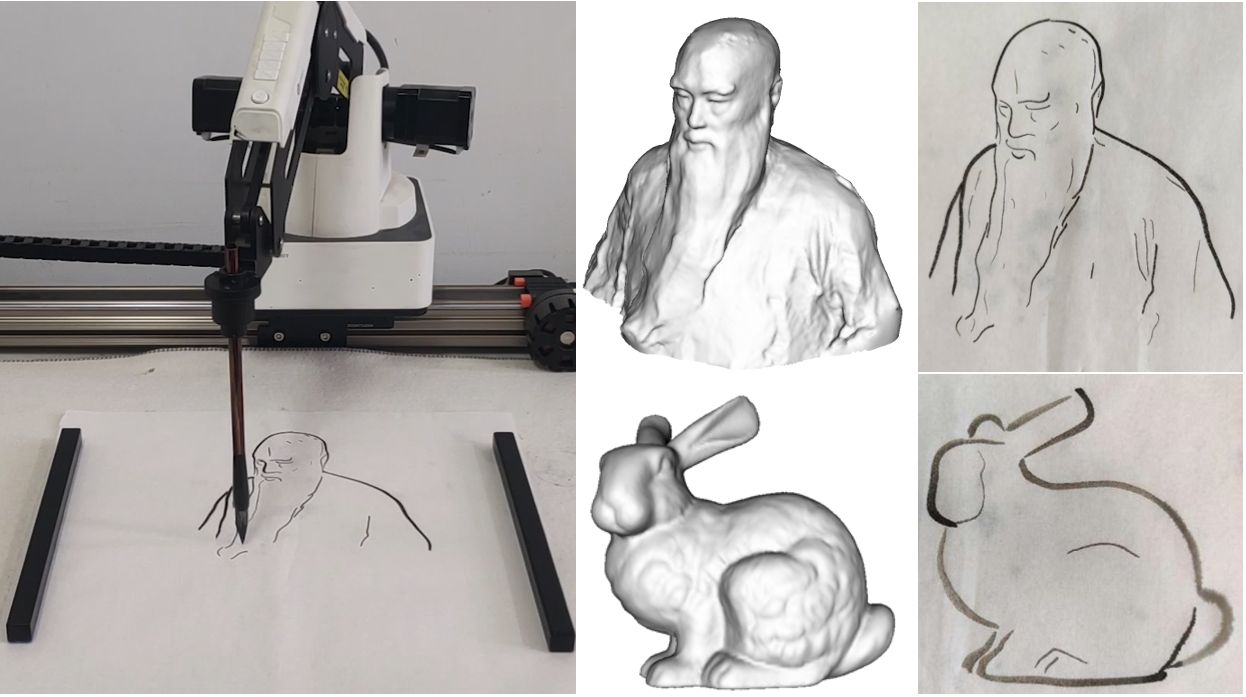

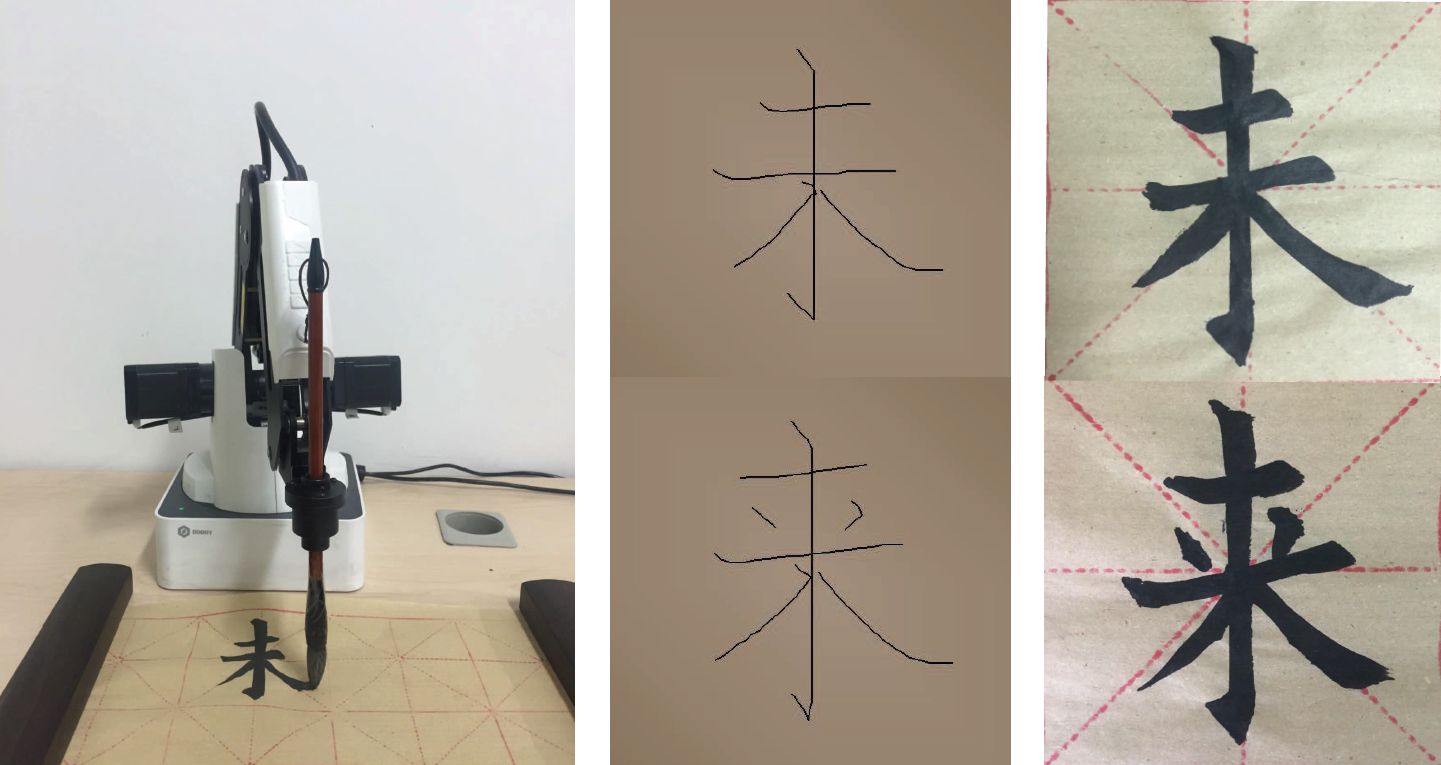

[6] Ink-wash-style drawing with a robotic arm (national Third Prize, 3rd China Postgraduate Robot Innovation Design Competition)

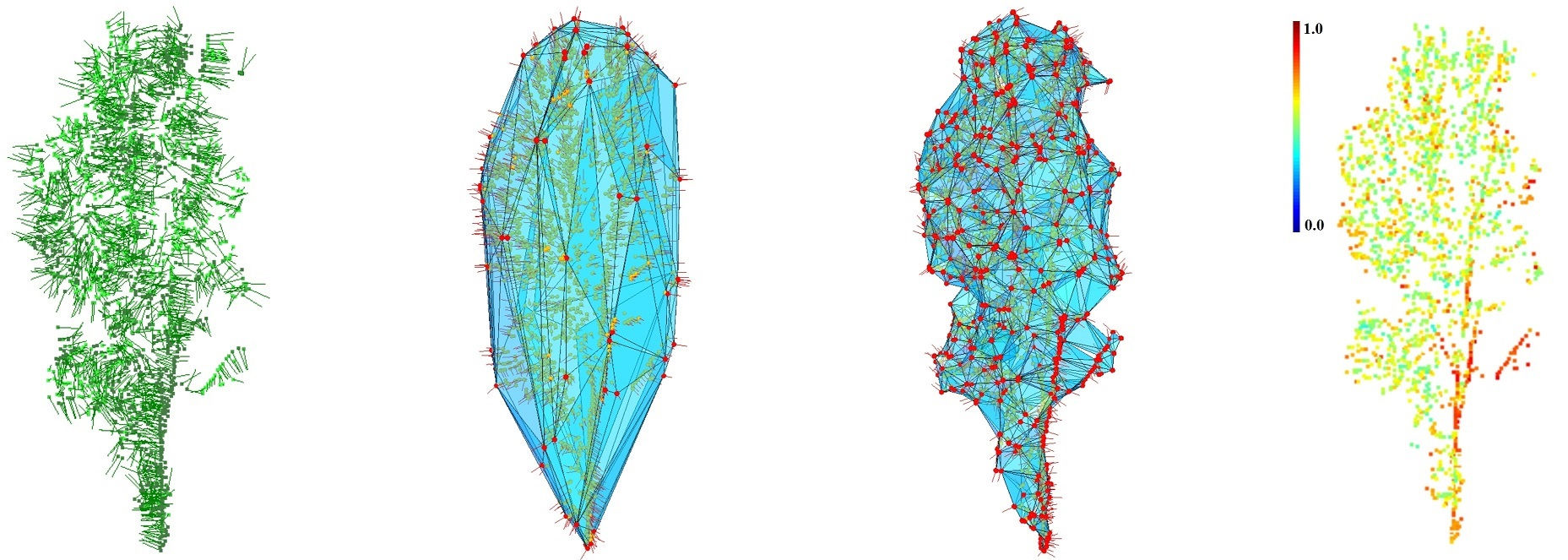

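[7] 3D reconstruction of tree trunks from incomplete point clouds (national Third Prize, 3rd China Postgraduate Rural Revitalization "Science and Technology for Agriculture+" Innovation Competition) [Link]

[8] Regular-script (Kai) calligraphy writing with a robotic arm (CGI2019; national Third Prize, 2nd China Postgraduate Robot Innovation Design Competition)

[9] Free-viewpoint ink-wash painting of 3D models via robotic arm control (IEEE ICRA2024; national Third Prize, 4th China Postgraduate Robot Innovation Design Competition)

[10] Real-time 3D tree reconstruction system based on sparse point clouds (national First Prize, 5th China Postgraduate Artificial Intelligence Innovation Competition; SMI2018, C&G2024)

[11] Robot for painting 3D models in the cun (texture-stroke) ink-wash style (national Third Prize, 5th China Postgraduate Robot Innovation Design Competition)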

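Projects and Academic Output

Projects Led

[1] Sub-project of the Shaanxi Qinchuangyuan "Scientist + Engineer" Team Program (2025QCY-KXJ-171), 2025.1-2027.12;

[2] Detection of tree-obstacle hazards along power transmission lines from airborne LiDAR point clouds (industry-funded project), 2025.1-2025.12;

[3] Sub-project of a Shaanxi Province department-city joint key project (2022GD-TSLD-53), 2022.7-2025.6;

[4] Natural Science Basic Research Plan of Shaanxi Province (General Program) (2022JM-363), 2022.1-2023.12;

[5] Point cloud-based power tower modeling algorithms and software development (industry-funded project), 2021.1-2022.12;

[6] Satellite remote sensing-based extraction and growth monitoring of commercial orchards in the western Guanzhong region (sub-project of a Demonstration Zone science and technology program), 2021.1-2022.12;

[7] Natural Science Basic Research Plan of Shaanxi Province (General Program) (2019JM-370), 2019.1-2020.12;

[8] Sub-task of a National High-Tech R&D Program (863 Program) project (2013AA10230402), 2013.1-2017.12;

[9] Natural Science Basic Research Plan of Shaanxi Province (Youth Program) (2015JQ6250), 2015.1-2016.12;

[10] Scientific Research Foundation for Returned Overseas Chinese Scholars, Ministry of Education (K308021401), 2014.1-2015.12;

[11] National Natural Science Foundation of China, Young Scientists Fund (61303124), 2014.1-2016.12.

Selected Publications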

[1] Weihuan Feng, Mingxin Jiao, Ning Liu, Long Yang, Zhiyi Zhang, Shaojun Hu*. Realistic reconstruction of trees from sparse images in volumetric space. Computers & Graphics. 121:103953, 2024. doi: 10.1016/j.cag.2024.103953. (CCF-recommended international journal) [Link]

[2] Long Yang, Cheng Zhang, Jiahao Wang, Yijia He, Yan Liu, Shaojun Hu, Chunxia Xiao, and Zhiyi Zhang*. Normal Reorientation for Scene Consistency. IEEE Transactions on Visualization and Computer Graphics (TVCG), 2024, Published online, doi: 10.1109/TVCG.2024.3429401. (CCF-recommended international journal) [Link]

[3] Hao Jin, Minghui Lian, Shicheng Qiu, Xuxu Han, Xizhi Zhao, Long Yang, Zhiyi Zhang, Haoran Xie, Kouichi Konno, Shaojun Hu*. A Semi-automatic Oriental Ink Painting Framework for Robotic Drawing from 3D Models. IEEE Robotics and Automation Letters (IEEE ICRA2024), 8(10): 6667-6674, 2023. doi: 10.1109/LRA.2023.3311364. (CCF-recommended international conference) [Preprint][Link1][Link2][Video]

[4] Tong Liu, Zhenhua Yang, Shaojun Hu, Zhiyi Zhang, Chunxia Xiao, Xiaohu Guo, Long Yang*. Neighbor reweighted local centroid for geometric feature identification. IEEE Transactions on Visualization and Computer Graphics, 29(2): 1545-1558, 2023. doi: 10.1109/TVCG.2021.3124911. (CCF-recommended international journal) [Link]

[5] Yanxing Lv, Yida Zhang, Suying Dong, Long Yang, Zhiyi Zhang, Zhengrong Li, Shaojun Hu*. A Convex Hull-Based Feature Descriptor for Learning Tree Species Classification from ALS Point Clouds. IEEE Geoscience and Remote Sensing Letters, 19: 1-5, 2022. doi: 10.1109/LGRS.2021.3055773. (CCF-recommended international journal) [Preprint][Link]

[6] Zhengyu Huang, Zhiyi Zhang, Nan Geng, Long Yang, Dongjian He, Shaojun Hu*. Realistic Modeling of Tree Ramifications from an Optimal Manifold Control Mesh. ICIG2019. Lecture Notes in Computer Science, vol 11902:316-332, 2019. Springer, Cham. (Accepted as Oral paper by ICIG 2019) [Preprint][Link][Slides]

[7] Xinyue Zhang, Yuanhao Li, Zhiyi Zhang, Kouichi Konno, Shaojun Hu*. Intelligent Chinese Calligraphy Beautification from Handwritten Characters for Robotic Writing. The Visual Computer. 35(6–8): 1193–1205, 2019. doi: 10.1007/s00371-019-01675-w. (CCF-recommended international journal/conference) [Link][Preprint][Video][Slides]

[8] Chunquan Liang*, Yang Zhang, Yanming Nie, Shaojun Hu. Continuously Maintaining Approximate Quantile Summaries Over Large Uncertain Datasets. Information Sciences. 456: 174-190, 2018. (CCF-recommended international journal) [Link]

[9] Shaojun Hu*, Zhengrong Li, Zhiyi Zhang, Dongjian He, Michael Wimmer. Efficient tree modeling from airborne LiDAR point clouds. Computers & Graphics. 67: 1-13, 2017. doi: 10.1016/j.cag.2017.04.004. (Invited to present at SMI2018) (CCF-recommended international journal) [Link][Preprint][Video][Binary][Appendix][Slides]

[10] Shaojun Hu*, Zhiyi Zhang, Haoran Xie, Takeo Igarashi. Data-driven modeling and animation of outdoor trees through interactive approach. The Visual Computer. 33(6-8): 1017-1027, 2017. doi:10.1007/s00371-017-1377-6. (CCF-recommended international journal) [Link][Preprint][Video][Binary][Appendix][Slides]

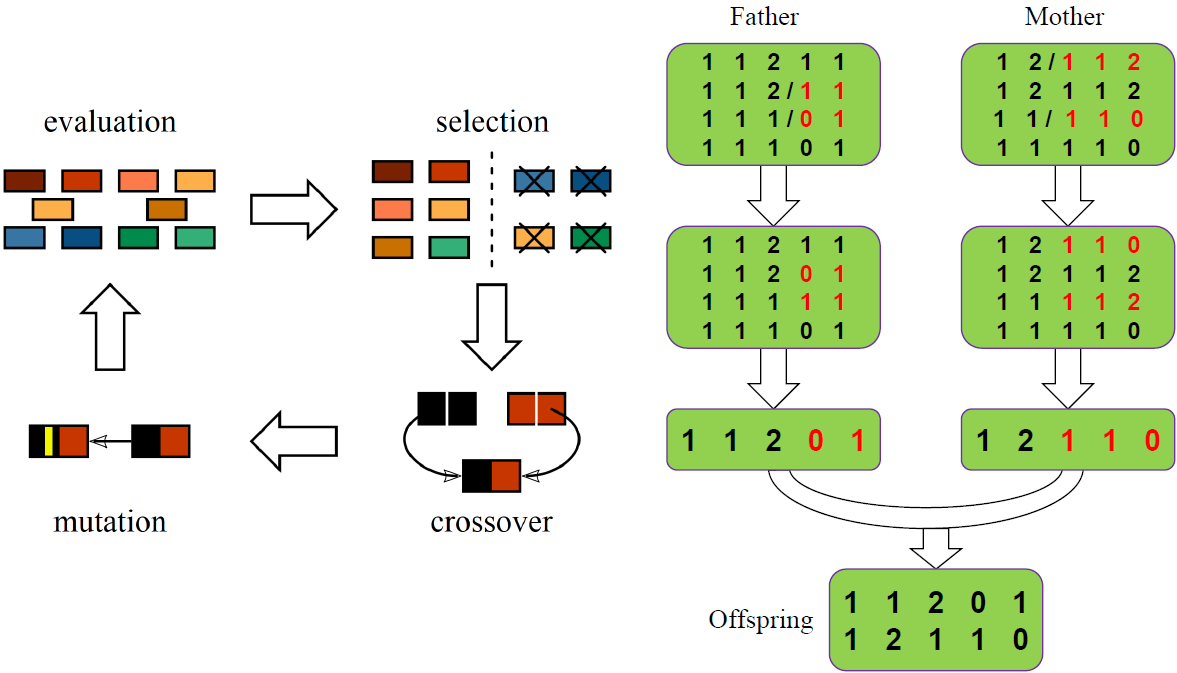

[11] Xiaoqian Jiang*, Shaojun Hu*, Qi Xu, Yujun Chang, Shiheng Tao. Relative effects of segregation and recombination on the evolution of sex in finite diploid populations. Heredity. 111: 505-512, 2013. doi:10.1038/hdy.2013.72. (*These authors contributed equally to this work) (Chinese Academy of Sciences journal ranking: Q2) [Link][Binary]

[12] Shaojun Hu*, Norishige Chiba, Dongjian He. Realistic animation of interactive trees. The Visual Computer. 28(6-8): 859-868, 2012. doi: 10.1007/s00371-012-0694-z. (CCF-recommended international journal) [Link][Preprint][Video1][Video2][Binary][Slides]

[13] Shaojun Hu*, Tadahiro Fujimoto, Norishige Chiba. Pseudo-dynamics model of a cantilever beam for animating flexible leaves and branches in wind field. Computer Animation and Virtual Worlds. 20(2-3): 279-287, 2009. doi: 10.1002/cav.309. (CCF-recommended international journal/conference) [Link][Preprint][Video1][Video2][Video3][Binary][Slides]

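Granted Invention Patents

[1] Shaojun Hu, Leyuan Lu (陆乐园), et al. A deep learning-based tree point cloud completion method. ZL202210107549.5. Invention patent (granted). Mar. 2025.

[2] Shaojun Hu, Weihuan Feng. A volumetric-space subtractive tree point cloud reconstruction method based on multi-view images. ZL202210108374.X. Invention patent (granted). Oct. 2024.

[3] Shaojun Hu, Yida Zhang, et al. A regularized modeling method based on power tower point clouds. ZL202110606090.9. Invention patent (granted). May 2023.

[4] Shaojun Hu, Zhengrong Li, et al. A method for reconstructing 3D tree models from airborne LiDAR tree point clouds. ZL202010469062.2. Invention patent (granted). Feb. 2023.

[5] Shaojun Hu, Dongjian He, et al. An interactive modeling method for real trees based on sparse images. ZL2014100913289. Invention patent (granted). June 2017.

[6] Shaojun Hu, Dongjian He, et al. A tree animation simulation method based on simplified modal analysis. ZL2014100913274. Invention patent (granted). Aug. 2017.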

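Current Students

Third-year master's students: Tingting Li (李婷婷), Zhiqiang Wang (王治强), Yuhao Liu (刘宇浩), Zhiqi Yan (严祉祺)

Second-year master's students: Liyuan Liu (刘力源), Jiahao Zhang (张家豪), Boxuan Jiang (姜博轩), Guanxiong Huang (黄冠雄)

First-year master's students: Tianle Qiu (邱天乐), Mingyuan Ma (马铭源), Yanwen Qiu (邱严雯), Jincheng Dai (戴锦程)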

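Graduated Students and Selected Student Innovation Project Members

Class of 2017: Peng He (何鹏, co-supervised) [academic master's: Qunar], Haowen Fu (付浩文) [Shenzhen Zhuozhuang Network Co. (深圳市茁壮网络股份)], Gaoyuan Zhang (张高原) [Hainan Airlines], Qi Wei (卫琦) [selected civil servant program (选调生)]

Class of 2018: Shijin Yu (于世瑾) [Shanghai Guao Electronic Technology (上海古鳌电子科技)], Tuo Wei (魏拓) [iFLYTEK]

Class of 2019: Yanchen Li (李琰琛) [NetEase Games], Hao Wang (王浩) [Lenovo Xi'an Research Institute], Chao Zhu (朱超) [Hangzhou Tianque Technology (杭州天阙科技)], Guangyu Luo (罗广宇) [Xi'an Aerospace Tianhui Data Technology (西安航天天绘数据技术)], Zhengyu Huang (黄正宇) [academic master's: Ph.D. at JAIST]

Class of 2020: Xinyue Zhang (张馨月) [academic master's: State Grid], Shengjun Zhang (张胜君) [Xidian University], Shasha Li (李莎莎) [Xi'an Jiaotong University]

Class of 2021: Yanxing Lv (吕艳星) [academic master's: Bank of China R&D Center], Yida Zhang (张一大) [Bank of China R&D Center], Yuekun Zhang (张玥焜) [Xi'an Jiaotong University], Xizhi Zhao (赵习之) [Bank of China R&D Center], Yuanhao Li (李原浩) [ByteDance]

Class of 2022: Weihuan Feng (冯伟桓) [Glodon], Leyuan Lu (陆乐园) [Glodon], Longtao Hu (胡龙涛) [Glodon], Haoxiang Cui (崔昊祥) [Pinduoduo]

Class of 2023: Hao Jin (金昊) [academic master's: Ph.D. at JAIST], Jianghao Sun (孙江昊) [Bank of Communications], Chunlin Liu (刘春麟) [Shanghai PICC Technology (上海人保科技)], Zhongshan Li (李中山) [Shanghai Xichang Microelectronics (上海矽昌微电子)], Yuxin Zhang (张誉心) [State Grid], Yuxuan Qiu (邱雨萱) [Chinese Academy of Sciences]

Class of 2024: Shicheng Qiu (邱事成) [Pinggao Group], Mingxin Jiao (焦明鑫) [State Grid NARI Group], Chong Wang (王冲) [Organization Department of the Guizhou Provincial Party Committee], Xuxu Han (韩旭旭) [professional master's: Ph.D. at Shaanxi Normal University], Bojian Wang (王铂坚) [Eindhoven University of Technology]

Class of 2025: Ning Liu (刘宁) [ABC Financial Technology (农银金科)], Weiyi Huang (黄韦毅) [China Unicom Research Institute], Canyu Cui (崔粲宇) [Industrial and Commercial Bank of China], Xingshuo Peng (彭星硕, co-supervised) [academic Ph.D.: Xi'an Municipal Bureau of Agriculture and Rural Affairs]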


Name:Shaojun HU

Title:Associate Professor

Office:Room 317, College of Information Engineering

Email:hsj AT nwsuaf DOT edu DOT cn

Researchgate Link: https://www.researchgate.net/profile/Shaojun_Hu

Personal Information

Shaojun Hu is an Associate Professor at Northwest A&F University, China. His research interests include computer graphics and human-computer interaction. He received his Ph.D. degree from the Graduate School of Information Science and Technology at Iwate University, Japan, in September 2009, supervised by Prof. Norishige Chiba and Prof. Tadahiro Fujimoto. He worked with Prof. Michael Wimmer at the Institute of Computer Graphics and Algorithms, TU Wien, Austria, from October 2014 to February 2015, and with Prof. Takeo Igarashi at the User Interface Research Group, the University of Tokyo, as a visiting researcher from March 2016 to March 2017. He joined the Department of Computer Science at Northwest A&F University as a Lecturer in 2010 and became an Associate Professor in 2014.

He has served as a council member of the Shaanxi Society of Image and Graphics; a committee member of Nicograph International 2018/2019/2023, IFIP-ICEC2020, and ISID2021/2022; the local organizing committee chair of ICVR2023; and a reviewer for "IEEE TVCG", "IEEE GRSL", "Computer Graphics Forum", "The Visual Computer", "Computers & Graphics", "Computer Animation & Virtual Worlds" and "Computers & Electronics in Agriculture". He is also a member of ACM and the China Computer Federation (CCF). He teaches several courses for undergraduate and graduate students, including "C Programming Language", "Object-oriented Programming Using C++", "Data Structures and Algorithms", "Computer Graphics", "Virtual Reality Technology and Applications" and "Advanced 3D Modeling".

Shaojun Hu runs a computer graphics lab with Prof. Zhiyi Zhang and Prof. Nan Geng at Northwest A&F University. He has supervised 17 Master's students and is currently supervising 13. He is responsible for several projects, including a task of the National 863 Plan [2013AA10230402] and grants from the Natural Science Foundation of China [61303124], the Natural Science Basic Research Plan of Shaanxi [2015JQ6250], [2019JM-370], and the Fundamental Research Funds for the Central Universities [2452017343].

Research Directions

Computer graphics, computer animation, natural phenomena

Master Students (2019)

Important Dates

SPM 2020 (Strasbourg, France) (https://spm2020.sciencesconf.org/)

Abstract for full papers: January 15, 2020

Full paper submission: January 20, 2020

First review notification: February 28, 2020

Revised papers due: March 21, 2020

Final notification: April 10, 2020

Camera ready papers: April 24, 2020

Conference: June 2-4, 2020

CGI 2020 (Geneva, Switzerland)(http://www.cgs-network.org/cgi20/)

Submission Deadline: February 15, 2020

Notification of Acceptance: March 24, 2020

Camera-Ready Journal Papers: April 07, 2020

Conference Dates: JUNE 22-25, 2020

CASA2020 (Bournemouth, UK)(http://casa2020.bournemouth.ac.uk/)

Submission: March 16, 2020

Notification of acceptance: April 20, 2020

Camera ready: May 4, 2020

Conference dates: Jul 1-3, 2020

ACM SIGGRAPH 2020(Washington DC, USA)(https://s2020.siggraph.org/submissions/)

Technical papers (stage 1): 22 JANUARY, 2020

Technical papers (stage 2): 23 JANUARY, 2020

Technical papers (stage 3): 24 JANUARY, 2020

Conference dates: 19-23 July, 2020

ACM SIGGRAPH Asia 2020 (Daegu, South Korea)(https://sa2019.siggraph.org/about-us/sa2020)

Submissions Form Deadline: 19 May 2020 ?

Paper Deadline: 20 May 2020 ?

Upload Deadline: 21 May 2020 ?

Conference: 17 - 20 November 2020 ?

Exhibition: 18 - 20 November 2020 ?

ACM SIGCHI 2021 (Yokohama, Japan)(http://chi2021.acm.org/)

Paper deadline: Sept 10, 2020

Conference dates: May 8 – 13, 2021

ACM UIST 2020 (Minneapolis, USA)(http://uist.acm.org/uist2020/)

Paper Deadline: April 1, 2020, 5PM PDT

Conference dates: October 20-23, 2020

Eurographics 2021 (Vienna, Austria) (https://conferences.eg.org/eg2021/)

Abstract: September 26, 2020 ?

Submission: October 3, 2020 ?

Reviews available: November 21, 2020 ?

Rebuttal: November 28, 2020 ?

Notification to authors: December 12, 2020 ?

Conference date: 3rd to 7th May, 2021

Pacific Graphics 2020 (Wellington, New Zealand)(https://ecs.wgtn.ac.nz/Events/PG2020/)

Abstract submission: 5 June, 2020?

Regular paper submission: 7 June, 2020?

Reviews to authors: 12 July, 2020?

Conference dates: October 26-29, 2020

I3D 2020 (San Francisco, CA, USA)(https://i3dsymposium.github.io/2020/cfp.html)

Paper submission deadline: 13 December 2019

Extension for re-submissions: 20 December 2019

Notification of committee decisions: 10 February 2020

Conference dates: 5-7 May 2020

IEEE VR 2020 (Atlanta, Georgia, USA)(http://www.ieeevr.org/2020/)

Abstracts due: September 3, 2019

Submissions due: September 10, 2019

Final notifications: January 22, 2020

Conference dates: March 22nd - 26th, 2020

SCA 2020 (Montreal, QC, Canada)(http://computeranimation.org/)

Title and Abstract Submission: May 4,2020

Paper Submission: May 7,2020

Paper Notification: June 24,2020

Conference dates: 24-26 August, 2020

IEEE ICRA 2021 (Xi'an China) (http://2021.ieee-icra.org/)

Submission of all contributions: Sept. 15, 2020

Notification of acceptance: Jan. 15, 2021

Conference dates: May 16-22, 2021

VRST 2020 (Ottawa, Canada) (https://vrst.acm.org/vrst2020/index.html)

Submission due: ?

Conference dates: 1-4 November, 2020

Selected Publications

A Semi-Automatic Oriental Ink Painting Framework for Robotic Drawing From 3D Models

Hao Jin (a), Minghui Lian (a), Shicheng Qiu (a), Xuxu Han (a), Xizhi Zhao (a), Long Yang (a), Zhiyi Zhang (a), Haoran Xie (b), Kouichi Konno (c), Shaojun Hu (a)*

a. College of Information Engineering, Northwest A&F University, China

b. JAIST, Ishikawa, Japan

c. Faculty of Science and Engineering, Iwate University, Morioka, Japan

Abstract

Creating visually pleasing stylized ink paintings from 3D models is a challenge in robotic manipulation. We propose a semi-automatic framework that can extract expressive strokes from 3D models and draw them in oriental ink painting styles by using a robotic arm. The framework consists of a simulation stage and a robotic drawing stage. In the simulation stage, geometrical contours were automatically extracted from a certain viewpoint and a neural network was employed to create simplified contours. Then, expressive digital strokes were generated after interactive editing according to user's aesthetic understanding. In the robotic drawing stage, an optimization method was presented for drawing smooth and physically consistent strokes to the digital strokes, and two oriental ink painting styles termed as Noutan (shade) and Kasure (scratchiness) were applied to the strokes by robotic control of a brush's translation, dipping and scraping. Unlike existing methods that concentrate on generating paintings from 2D images, our framework has the advantage of rendering stylized ink paintings from 3D models by using a consumer-grade robotic arm. We evaluate the proposed framework by taking 3 standard models and a user-defined model as examples. The results show that our framework is able to draw visually pleasing oriental ink paintings with expressive strokes.

Hao Jin, Minghui Lian, Shicheng Qiu, Xuxu Han, Xizhi Zhao, Long Yang, Zhiyi Zhang, Haoran Xie, Kouichi Konno, Shaojun Hu*. A Semi-automatic Oriental Ink Painting Framework for Robotic Drawing from 3D Models. IEEE Robotics and Automation Letters, 8(10): 6667-6674, 2023. doi: 10.1109/LRA.2023.3311364. [Preprint][Link1][Link2][Video]

A Convex Hull-Based Feature Descriptor for Learning Tree Species Classification From ALS Point Clouds

Yanxing Lv (a), Yida Zhang (a), Suying Dong (a), Long Yang (a), Zhiyi Zhang (a), Zhengrong Li (b), Shaojun Hu (a)*

a. College of Information Engineering, Northwest A&F University, China

b. Beijing New3S Technology Co. Ltd., Beijing, China

Abstract

Classifying tree species from point clouds acquired by light detection and ranging (LiDAR) scanning systems is important in many applications, including remote sensing, virtual reality, and forestry inventory. Compared with terrestrial laser scanning systems, airborne laser scanning (ALS) systems can acquire large-scale tree point clouds from only a single scan. However, ALS point clouds have the disadvantages of low density, uneven distribution, and unclear branch structure, making the classification of tree species from ALS point clouds a challenging task. Recently, deep learning-based classification approaches, such as PointNet++, which can operate directly on 3-D point sets, have been intensively studied in scene classification. However, the classification precision of learning-based approaches for point clouds relies on point coordinates and features, such as normals. Unlike the face normals of regular objects, trees have complex branch structures and detailed leaves, which are difficult to capture using ALS systems. Hence, it might be inappropriate to use the normals of ALS tree points for classification. In this letter, we propose a novel convex hull-based feature descriptor for tree species classification using the deep learning network PointNet++. To evaluate the effectiveness of our approach, three additional feature descriptors (normal descriptor, alpha shape-based descriptor, and covariance descriptor) are also investigated with PointNet++. The results show that the convex hull-based feature descriptor can achieve 86.6% overall accuracy in tree species classification, which is notably higher than the other three descriptors.

Yanxing Lv, Yida Zhang, Suying Dong, Long Yang, Zhiyi Zhang, Zhengrong Li, Shaojun Hu*. A Convex-Hull Based Feature Descriptor for Learning Tree Species Classification from ALS Point Clouds. IEEE Geoscience and Remote Sensing Letters, 2021. doi: 10.1109/LGRS.2021.3055773. [Preprint][Link]

Realistic Modeling of Tree Ramifications from an Optimal Manifold Control Mesh

Zhengyu Huang (a), Zhiyi Zhang (a), Nan Geng (a), Long Yang (a), Dongjian He (b), Shaojun Hu (a)*

a. College of Information Engineering, Northwest A&F University, China

b. College of Mechanical and Electronic Engineering, Northwest A&F University, China

Abstract

Modeling realistic branches and ramifications of trees is a challenging task because of their complex geometric structures. Many approaches have been proposed to generate plausible tree models from images, sketches, point clouds, and botanical rules. However, most approaches focus on a global impression of trees, such as the topological structure of branches and arrangement of leaves, without taking continuity of branch ramifications into consideration. To model a complete tree quadrilateral mesh (quad-mesh) with smooth ramifications, we propose an optimization method to calculate a suitable control mesh for Catmull-Clark subdivision. Given a tree's skeleton information, we build a local coordinate system for each joint node, and orient each node appropriately based on the angle between a parent branch and its child branch. Then, we create the corresponding basic ramification units using a cuboid-like quad-mesh, which is mapped back to the world coordinate. To obtain a suitable manifold initial control mesh as a main mesh, the ramifications are classified into main and additional ramifications, and a bottom-up optimization approach is applied to adjust the positions of the main ramification units when they connect to their neighbors. Next, the first round of Catmull-Clark subdivision is applied to the main ramifications. The additional ramifications, which were selected to alleviate visual distortion in the preceding step, are added back to the main mesh using a cut-paste operation. Finally, the second round of Catmull-Clark subdivision is used to generate the final quad-mesh of the entire tree. The results demonstrated that our method generated a realistic tree quad-mesh effectively from different tree skeletons.

Zhengyu Huang, Zhiyi Zhang, Nan Geng, Long Yang, Dongjian He, Shaojun Hu*. Realistic Modeling of Tree Ramifications from an Optimal Manifold Control Mesh. ICIG2019. Lecture Notes in Computer Science, vol 11902:316-332, 2019. Springer, Cham. (ICIG2019, oral paper acceptance rate = 8.6%). [Preprint][Link][Slides]

Intelligent Chinese Calligraphy Beautification from Handwritten Characters for Robotic Writing

Xinyue Zhang (a), Yuanhao Li (a), Zhiyi Zhang (a), Kouichi Konno (b), Shaojun Hu (a)*

a. College of Information Engineering, Northwest A&F University, China

b. Faculty of Engineering, Iwate University, Japan

Abstract

Chinese calligraphy is the artistic expression of character writing and is highly valued in East Asia. However, it is a challenge for non-expert users to write visually pleasing calligraphy with his or her own unique style. In this paper, we develop an intelligent system that beautifies Chinese handwriting characters and physically writes them in a certain calligraphy style using a robotic arm. First, we sketch the handwriting characters using a mouse or a touch pad. Then, we employ a convolutional neural network to identify each stroke from the skeletons, and the corresponding standard stroke is retrieved from a pre-built calligraphy stroke library for robotic arm writing. To output aesthetically beautiful calligraphy with the user's style, we propose a global optimization approach to solve the minimization problem between the handwritten strokes and standard calligraphy strokes, in which a shape character vector is presented to describe the shape of standard strokes. Unlike existing systems that focus on the generation of digital calligraphy from handwritten characters, our system has the advantage of converting the user-input handwriting into physical calligraphy written by a robotic arm. We take the regular script (Kai) style as an example and perform a user study to evaluate the effectiveness of the system. The writing results show that our system can achieve visually pleasing calligraphy from various input handwriting while retaining the user's style.

Xinyue Zhang, Yuanhao Li, Zhiyi Zhang, Kouichi Konno and Shaojun Hu*. Intelligent Chinese Calligraphy Beautification from Handwritten Characters for Robotic Writing. The Visual Computer. 35(6–8): 1193–1205, 2019. doi: 10.1007/s00371-019-01675-w. Accepted by CGI2019 (acceptance rate = 21.6%). [Preprint][Link][Video][Slides]

Efficient Tree Modeling from Airborne LiDAR Point Clouds

Shaojun Hu (a)*, Zhengrong Li (b), Zhiyi Zhang (a), Dongjian He (c), Michael Wimmer (d)

a. College of Information Engineering, Northwest A&F University, China

b. Beijing New3S Technology Co.Ltd., China

c. College of Mechanical and Electronic Engineering, Northwest A&F University, China

d. Institute of Computer Graphics and Algorithms, Vienna University of Technology, Austria

Abstract

Modeling real-world trees is important in many application areas, including computer graphics, botany and forestry. An example of a modeling method is reconstruction from light detection and ranging (LiDAR) scans. In contrast to terrestrial LiDAR systems, airborne LiDAR systems – even current high-resolution systems – capture only very few samples on tree branches, which makes the reconstruction of trees from airborne LiDAR a challenging task. In this paper, we present a new method to model plausible trees with fine details from airborne LiDAR point clouds. To reconstruct tree models, first, we use a normalized cut method to segment an individual tree point cloud. Then, trunk points are added to supplement the incomplete point cloud, and a connected graph is constructed by searching sufficient nearest neighbors for each point. Based on the observation of real-world trees, a direction field is created to restrict branch directions. Then, branch skeletons are constructed using a bottom-up greedy algorithm with a priority queue, and leaves are arranged according to phyllotaxis. We demonstrate our method on a variety of examples and show that it can generate a plausible tree model in less than one second, in addition to preserving features of the original point cloud.

Shaojun Hu*, Zhengrong Li, Zhiyi Zhang, Dongjian He, Michael Wimmer. Efficient tree modeling from airborne LiDAR point clouds. Computers & Graphics, 2017. doi: 10.1016/j.cag.2017.04.004 (Invited to talk at SMI2018). [Link][Video][Binary][Appendix][Slides]

Acknowledgments

We would like to kindly thank Prof. Takeo Igarashi and the anonymous reviewers. This work was supported by the National 863 Plan [2013AA10230402], NSFC [61303124], NSBR Plan of Shaanxi [2015JQ6250], and Eurasia-Pacific Uninet Post-Doc Scholarship from OEAD.

Data-driven Modeling and Animation of Outdoor Trees Through Interactive Approach

Shaojun Hu (a)*, Zhiyi Zhang (a), Haoran Xie (b), Takeo Igarashi (b)

a. College of Information Engineering, Northwest A&F University, China

b. Graduate School of Information Science and Technology, The University of Tokyo, Japan

Abstract

Computer animation of trees has widespread applications in the fields of film production, video games and virtual reality. Physics-based methods are feasible solutions to achieve good approximations of tree movements. However, realistically animating a specific tree in the real world remains a challenge since physics-based methods rely on dynamic properties that are difficult to measure. In this paper, we present a low-cost interactive approach to model and animate outdoor trees from photographs and videos, which can be captured using a smartphone or handheld camera. An interactive editing approach is proposed to reconstruct detailed branches from photographs by considering an epipolar constraint. To track the motions of branches and leaves, a semi-automatic tracking method is presented to allow the user to interactively correct mis-tracked features. Then, the physical parameters of branches and leaves are estimated using a fast Fourier transform, and these properties are applied to a simplified physics-based model to generate animations of trees with various external forces. We compare the animation results with reference videos on several examples and demonstrate that our approach can achieve realistic tree animation.

Shaojun Hu*, Zhiyi Zhang, Haoran Xie, Takeo Igarashi. Data-driven modeling and animation of outdoor trees through interactive approach. The Visual Computer, 2017. doi:10.1007/s00371-017-1377-6. (Accepted by CGI2017, acceptance rate = 20%) [Link][Preprint][Video][Binary][Appendix][Slides]

Acknowledgments

We thank Hironori Yoshida, Seung-tak Noh and the anonymous reviewers. This work was supported by NSFC [61303124], National 863 Plan [2013AA10230402] and NSBR Plan of Shaanxi [2015JQ6250].

Motion Capture and Estimation of Dynamic Properties for Realistic Tree Animation

Shaojun Hu (a), Peng He (a), Dongjian He (b)*

a. College of Information Engineering, Northwest A&F University, China

b. College of Mechanical and Electronic Engineering, Northwest A&F University, China

Abstract

The realistic animation of real-world trees is a challenging task because natural trees have various morphology and internal dynamic properties. In this paper, we present an approach to model and animate a specific tree by capturing the motion of its branches. We chose Kinect V2 to record both the RGB and depth of motion of branches with markers. To obtain the three-dimensional (3D) trajectory of branches, we used the mean-shift algorithm to track the markers from color images generated by projecting a textured point cloud onto the image plane, and then inversely mapped the tracking results in the image to 3D coordinates. Next, we performed a fast Fourier transform on the tracked 3D positions to estimate the dynamic properties (i.e., the natural frequency) of the branches. We constructed static tree models using a space colonization algorithm. Given the dynamic properties and static tree models, we demonstrated that our approach can produce realistic animation of trees in wind fields.

Shaojun Hu, Peng He, Dongjian He*. Motion capture and estimation of dynamic properties for realistic tree animation. AniNex2017, Bournemouth, U.K., 2017. [Preprint][Link]

Relative Effects of Segregation and Recombination on the Evolution of Sex in Finite Diploid Populations

Xiaoqian Jiang (a, b, e), Shaojun Hu (c, e), Qi Xu (d), Yujun Chang (a, b) and Shiheng Tao (a, b)

a. College of Life Science, Northwest A&F University, China

b. Bioinformatics Center, Northwest A&F University, China

c. College of Information Engineering, Northwest A&F University, China

d. College of Animal Science and Technology, Yangzhou University, China

e. These authors contributed equally to this work

Abstract

The mechanism of reproducing more viable offspring in response to selection is a major factor influencing the advantages of sex. In diploids, sexual reproduction combines genotype by recombination and segregation. Theoretical studies of sexual reproduction have investigated the advantage of recombination in haploids. However, the potential advantage of segregation in diploids is less studied. This study aimed to quantify the relative contribution of recombination and segregation to the evolution of sex in finite diploids by using multilocus simulations. The mean fitness of a sexually or asexually reproduced population was calculated to describe the long-term effects of sex. The evolutionary fate of a sex or recombination modifier was also monitored to investigate the short-term effects of sex. Two different scenarios of mutations were considered: (1) only deleterious mutations were present and (2) a combination of deleterious and beneficial mutations. Results showed that the combined effects of segregation and recombination strongly contributed to the evolution of sex in diploids. If deleterious mutations were only present, segregation efficiently slowed down the speed of Muller’s ratchet. As the recombination level was increased, the accumulation of deleterious mutations was totally inhibited and recombination substantially contributed to the evolution of sex. The presence of beneficial mutations evidently increased the fixation rate of a recombination modifier. We also observed that the twofold cost of sex was easily overcome in diploids if a sex modifier caused a moderate frequency of sex.

Xiaoqian Jiang*, Shaojun Hu*, Qi Xu, Yujun Chang, Shiheng Tao. Relative effects of segregation and recombination on the evolution of sex in finite diploid populations. Heredity. 111: 505-512, 2013. doi:10.1038/hdy.2013.72. (*These authors contributed equally to this work) [Link][Binary]

Acknowledgments

Special thanks to Baolin Mu for his help in improving the speed of our computer program. We are grateful to the members at the Bioinformatics Center of Northwest A&F University for their generosity in providing their computer clusters to run our simulations. We also thank three anonymous reviewers for their constructive comments.

Realistic Animation of Interactive Trees

Shaojun Hu (a, *), Norishige Chiba (b), Dongjian He (c)

a. College of Information Engineering, Northwest A&F University, China

b. Dept. of Computer and Information Sciences, Iwate University, Japan

c. College of Mechanical and Electronic Engineering, Northwest A&F University, China

Abstract

We present a mathematical model for animating trees realistically by taking into account the influence of natural frequencies and damping ratios. To create realistic motion of branches, we choose three basic mode shapes from the modal analysis of a curved beam and combine them with a driven harmonic oscillator to approximate the Lissajous curve observed in pull-and-release tests of real trees. The forced vibration of trees is animated by applying a local coordinate transformation before applying the forced vibration model of curved beams. In addition, we assume that petioles are flexible to create natural motion of leaves. A wind field is generated from three-dimensional fBm noise to interact with the trees. Our animation model also allows users to manipulate trees interactively. We demonstrate several examples to show the realistic motion of interactive trees without using pre-computation or GPU acceleration. Various motions of trees can be achieved by choosing different combinations of natural frequencies and damping ratios according to tree species and seasons.
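To make the roles of natural frequency and damping ratio concrete, here is a minimal sketch of a single driven, damped harmonic oscillator. It is not the paper's tree model: it assumes one degree of freedom obeying x'' + 2*zeta*omega0*x' + omega0^2*x = a(t), with omega0 = 2*pi*f0 and a(t) a driving force per unit mass, integrated with a semi-implicit Euler step. The function name `simulate_oscillator` and the parameter values are hypothetical.

```python
import math


def simulate_oscillator(f0, zeta, force, x0=0.0, v0=0.0,
                        dt=0.001, duration=10.0):
    """Integrate x'' + 2*zeta*omega0*x' + omega0**2 * x = force(t).

    f0 is the natural frequency in Hz, zeta the damping ratio, and
    force(t) the driving force per unit mass (an assumption of this sketch).
    Returns the list of displacements, one per time step.
    """
    omega0 = 2.0 * math.pi * f0
    x, v = x0, v0
    xs = []
    steps = int(round(duration / dt))
    for i in range(steps):
        t = i * dt
        a = force(t) - 2.0 * zeta * omega0 * v - omega0 ** 2 * x
        v += a * dt  # semi-implicit Euler: update velocity first,
        x += v * dt  # then advance position with the new velocity
        xs.append(x)
    return xs


if __name__ == "__main__":
    # Pull-and-release style test: displace, then let go with no wind.
    free = simulate_oscillator(f0=1.0, zeta=0.1, force=lambda t: 0.0, x0=1.0)
    print("displacement after 10 s:", free[-1])
```

A lower damping ratio lets the oscillation ring for longer after release, while a higher natural frequency makes it sway faster, which is the kind of variation the abstract attributes to tree species and seasons.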

Shaojun Hu*, Norishige Chiba, Dongjian He. Realistic animation of interactive trees. The Visual Computer, 2012. doi: 10.1007/s00371-012-0694-z. (Accepted by CGI2012, acceptance rate = 18%) [Link][Preprint][Video1][Video2][Binary][Slides]

Acknowledgments

The authors would like to thank anonymous reviewers for their helpful suggestions. This work was partially supported by the Doctoral Start-up Funds (2010BSJJ059), the Fundamental Research Funds (QN2011135) of Northwest A&F University, and the National Science & Technology Supporting Plan of China (2011BAD29B08).

Pseudo-dynamics Model of a Cantilever Beam for Animating Flexible Leaves and Branches in Wind Field

Shaojun Hu (a, *), Tadahiro Fujimoto (a), Norishige Chiba (a)

a. Faculty of Engineering, Iwate University, 4-3-5 Ueda, Morioka, Japan

Abstract

We present a pseudo-dynamics model of a cantilever beam to visually simulate the motion of leaves and branches in a wind field by considering the influence of natural frequency (f0) and damping ratio (e). Our pseudo-dynamics model consists of a static equilibrium model, which can handle the bending of a curved beam loaded by an arbitrary force in three dimensions, and a dynamic motion model that describes the dynamic response of the beam subjected to turbulence. We apply the static equilibrium model to control the free bending of petioles and branches. Furthermore, we extend it to a surface deformation model that can deform some flexible laminae. Based on a mass-spring system, we analyze the dynamic response of a cantilever beam in turbulence with various combinations of f0 and e, and we give some guidelines for determining the combination types of branches and leaves according to their shapes and stiffness. The main advantage of our techniques is that we are able to deform curved branches and some flexible leaves dynamically by taking their structures into account. Finally, we demonstrate that our proposed method is effective by showing various motions of leaves and branches with different model
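For a single mass-spring-damper, the natural frequency f0 and the damping ratio (written e in the abstract) relate to mass, stiffness and damping coefficient through the textbook formulas f0 = sqrt(k/m) / (2*pi) and ratio = c / (2*sqrt(k*m)). The sketch below only illustrates that standard relationship; it is not taken from the paper, and the function names and sample values are hypothetical.

```python
import math


def spring_damper_from_modal(f0, zeta, mass):
    """Return (stiffness k, damping coefficient c) for a given f0 and zeta."""
    omega0 = 2.0 * math.pi * f0          # angular natural frequency
    stiffness = mass * omega0 ** 2       # k = m * omega0^2
    damping = 2.0 * zeta * mass * omega0  # c = 2 * zeta * m * omega0
    return stiffness, damping


def modal_from_spring_damper(stiffness, damping, mass):
    """Return (f0 in Hz, damping ratio zeta) for a given k, c and m."""
    f0 = math.sqrt(stiffness / mass) / (2.0 * math.pi)
    zeta = damping / (2.0 * math.sqrt(stiffness * mass))
    return f0, zeta


if __name__ == "__main__":
    # Hypothetical values for a light, lightly damped element.
    k, c = spring_damper_from_modal(f0=2.0, zeta=0.05, mass=0.5)
    print("stiffness:", k, "damping coefficient:", c)
```

Specifying motion by f0 and the damping ratio rather than by k and c keeps the controls tied to what is visible in the animation: how fast an element sways and how quickly the sway dies out.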

Shaojun Hu*, Tadahiro Fujimoto, Norishige Chiba. Pseudo-dynamics model of a cantilever beam for animating flexible leaves and branches in wind field. Computer Animation and Virtual Worlds, 2009. doi: 10.1002/cav.309. (Accepted by CASA2009, acceptance rate = 33%) [Link][Preprint][Video1][Video2][Video3][Binary][Slides]

Acknowledgments

The authors would like to thank anonymous reviewers for their helpful suggestions. This work was supported by the Ministry of Education, Science, Sports and Culture, Japan with Grant No. 19300022.